A Simple Machine

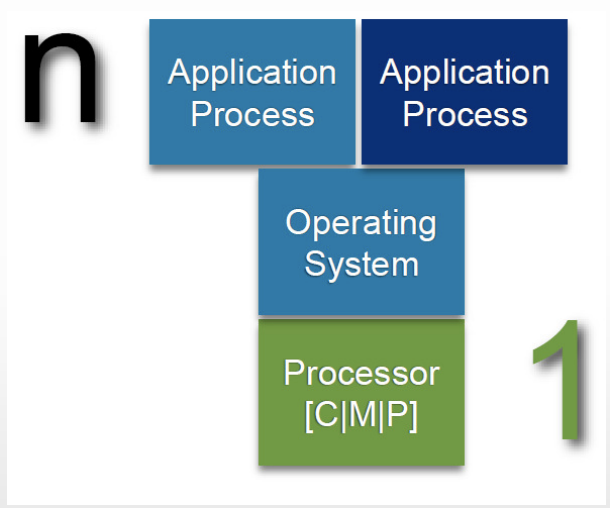

- A machine makes available computing and storage resources

- computing resource, eg. CPU

- storage resource, eg. a given amount of primary memory

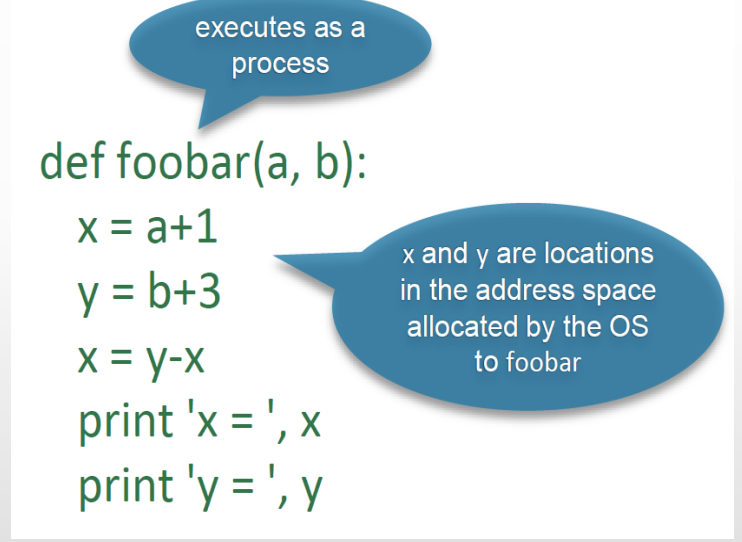

- A process is an executing instance of a program

- Computing and storage resource usage is typically controlled by an operating system (OS)

- an OS aims to make the most efficient use possible of those resources

- the OS assigns a unique identity to each process and then controls how a process is granted access to computing resources

- the OS also controls how a process is granted access to storage resources by assigning an address space to that process

- when the OS ensures that each process P has a single address space A that is exclusive to P, we are allowed a sequential reading of the steps that comprise the process

Sequential reading of the steps that comprise the process

- The OS ensures that no other process tampers with x and y, and only foobar has access to x and y
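
A minimal sketch of such a sequential reading, in Python. The original code for `foobar` is not reproduced in these notes, so the bodies of `foo` and `bar` and the way their results are combined are assumptions made purely for illustration.

```python
def foo() -> int:
    # Hypothetical stand-in for a long-running computation.
    return 2


def bar() -> int:
    # Hypothetical stand-in for another long-running computation.
    return 3


def foobar() -> int:
    # x and y live in the address space of the process running foobar,
    # so no other process can read or modify them.
    x = foo()      # step 1 runs to completion first
    y = bar()      # step 2 starts only after step 1 has finished
    return x + y   # step 3 sees exactly the values produced above
```

Because nothing else can touch `x` and `y`, the steps can be read top to bottom and the result depends only on `foo` and `bar`.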

Sequential processing

- if foo and bar take a long time to run, we wish to run them concurrently, in different processes, and even better, in parallel, on different processors

Sequential, isolated processing is simple, but bounded and limiting

- Non-sequential, non-isolated processing expands the bounds and limits with respect to performance

Sequential vs. Multi-Processing, Concurrent, Parallel and Distributed Computing

Multi-Processing vs. Multi-Tasking

- Two different concepts

- An OS multi-tasks by:

- allowing more than one process to be underway by controlling how each one makes use of the resources allocated to it

- implementing a scheduling policy, which grants each active process a time slice during which it can access the resources allocated to it

- In multi-tasking, processes are not really executing concurrently

- the appearance of concurrent execution stems from an effective scheduling policy

- If all processes get a fair share of the resource and they get it sufficiently often, it seems to users that all processes are executing concurrently
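
The time-slice idea can be sketched as a toy round-robin simulation. Round-robin is only one possible scheduling policy, chosen here as an assumption. The function name `round_robin`, the process names and the time units are hypothetical. This is not how a real OS scheduler is implemented.

```python
from collections import deque


def round_robin(burst_times: dict[str, int], time_slice: int) -> list[tuple[str, int]]:
    """Return the sequence of (process name, time units run) slices granted."""
    # Each queue entry is (process name, remaining time units).
    queue = deque(burst_times.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(time_slice, remaining)   # a process never runs past its slice
        timeline.append((name, run))
        if remaining > run:
            # Not finished: go to the back of the queue and wait for another turn.
            queue.append((name, remaining - run))
    return timeline


# Only one process runs at any instant, yet both make steady progress.
print(round_robin({"A": 3, "B": 5}, time_slice=2))
# [('A', 2), ('B', 2), ('A', 1), ('B', 2), ('B', 1)]
```

With a short enough slice, the interleaving is what gives users the impression that A and B run at the same time.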

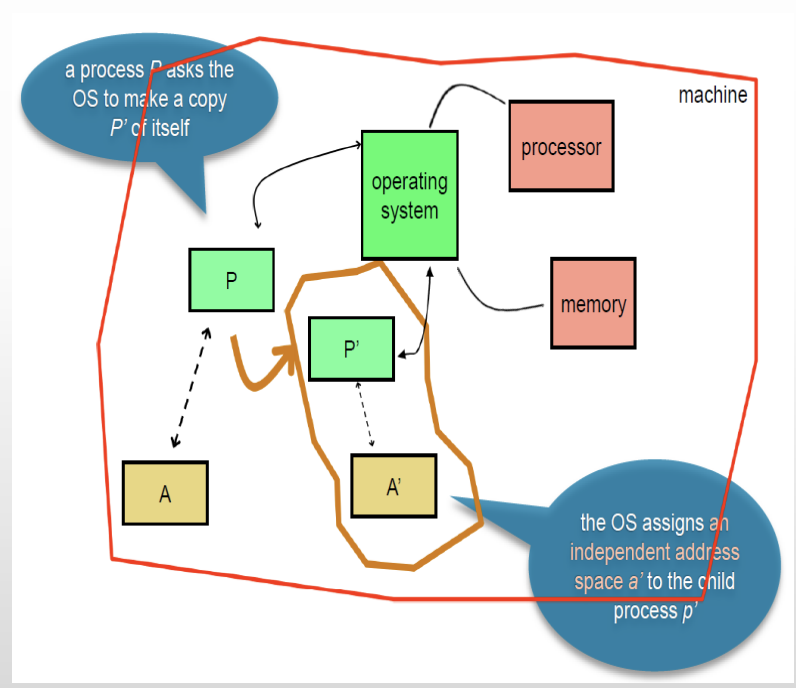

Multi-Processing by Forking

a process that forks issues an OS call that causes two copies of itself to be active concurrently

The child process is given a copy of the parent process’s address space. The address spaces are distinct.

- the child process starts executing after the OS call

- the parent can continue or wait for the child to execute

- the parent must find out how and when the child completes execution

because of the copying, forking can be expensive

modern OSs have strategies that make the actual cost quite affordable

forking is reasonably safe because the address spaces are distinct

When forking is used in multi-processing, consistency of processing results is more likely to occur than in cases where multi-processing is achieved by threading
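
A minimal sketch of forking, assuming a Unix-like OS where Python's `os.fork` is available. The variable `counter` and the function `fork_and_modify` are hypothetical names. Using a pipe to report back to the parent is just one of several ways a parent can learn what the child did.

```python
import os

counter = 0  # lives in the address space of the process that runs this module


def fork_and_modify() -> tuple[int, int]:
    """Fork, let the child change `counter`, return (child's value, parent's value)."""
    global counter
    read_fd, write_fd = os.pipe()
    pid = os.fork()            # from here on, two copies are active
    if pid == 0:
        # Child: works on its own copy of the address space.
        os.close(read_fd)
        counter += 100
        os.write(write_fd, str(counter).encode())
        os.close(write_fd)
        os._exit(0)            # leave immediately, without running parent cleanup
    # Parent: learns about the child's result only through the pipe.
    os.close(write_fd)
    data = os.read(read_fd, 64)
    os.close(read_fd)
    os.waitpid(pid, 0)         # find out when the child has completed
    return int(data.decode()), counter
```

Calling `fork_and_modify()` once in a fresh process should return `(100, 0)`: the child saw its own update, while the parent's `counter` was never touched.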

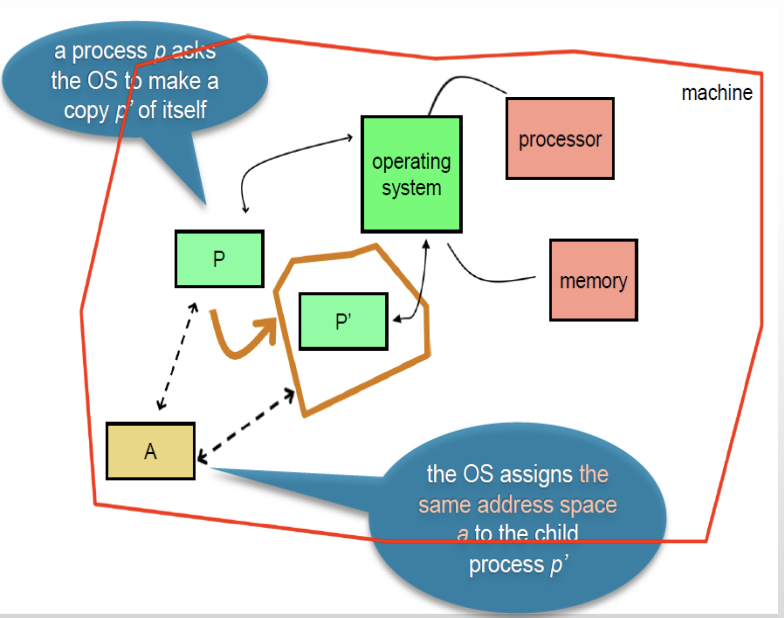

Multi-Processing by Threading

if parent and child need to interact and share, threading may be a better approach to multi-processing

with threading, the address space is not copied, it is shared

this means that if one thread changes a variable, all other threads see the change

this makes threading less expensive, but also less safe than forking
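
A minimal sketch of the shared address space, using Python's `threading` module. The names `shared`, `increment` and `run_threads` are hypothetical. The lock is an assumption about how one might restore safety, and is not something the notes prescribe.

```python
import threading

shared = {"count": 0}      # one object, visible to every thread in the process
lock = threading.Lock()


def increment(times: int) -> None:
    for _ in range(times):
        # Without the lock, two threads could interleave their
        # read-modify-write steps and an update could be lost.
        with lock:
            shared["count"] += 1


def run_threads(n_threads: int, times: int) -> int:
    """Start n_threads threads that all update the same variable."""
    shared["count"] = 0
    threads = [threading.Thread(target=increment, args=(times,)) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["count"]
```

Nothing is copied when a thread starts, which is why it is cheap. Every thread can also change `shared`, which is why coordination such as the lock becomes the programmer's responsibility.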

Concurrent Computing

consider **many** application processes

processes are often threads (the OS schedules the execution of n copies of process Pi, 1 <= i <= n, to run on the same processor, typically sharing a single address space)

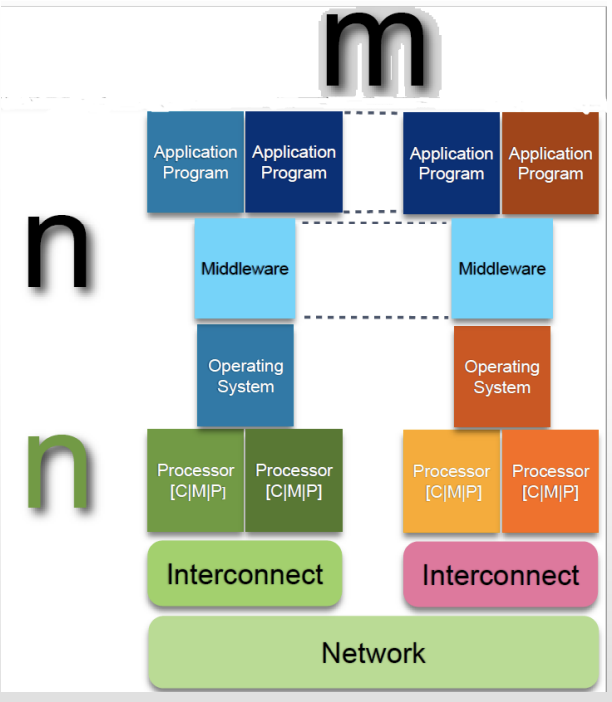

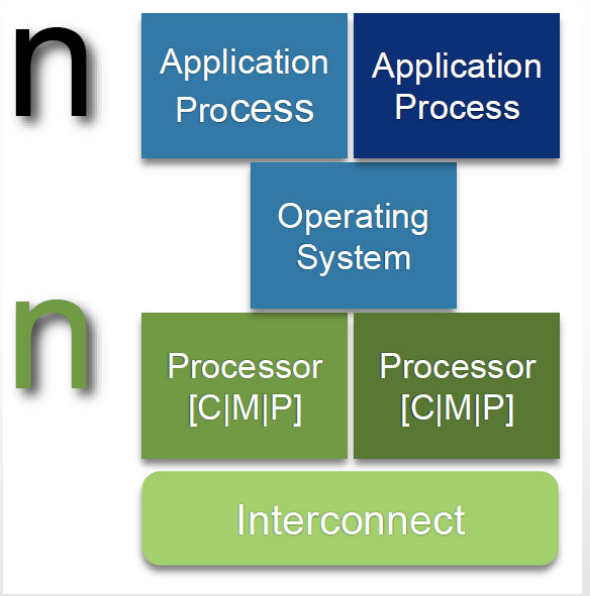

Parallel Computing

many processors bound by an interconnect (eg. a bus)

there are truly many processes running at the same time

Distributed Computing

- many independent, self-sufficient, autonomous, heterogeneous machines

- spatial separation

- message exchange is needed, network effects are felt

- complexity may reach a point in which applications are not written against OS services. Instead, they are written against a middleware API. The middleware then takes some of the complexity upon itself
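
The need for message exchange can be sketched with Python's `socket` module. To stay self-contained, this example simulates the two machines with two threads joined by `socket.socketpair()`. That is an assumption for illustration only: on real, spatially separated machines the socket would cross a network, with the latency and failures that implies. The names `worker` and `remote_square` are hypothetical.

```python
import socket
import threading


def worker(conn: socket.socket) -> None:
    """Hypothetical remote node: receives a number, replies with its square."""
    with conn:
        request = conn.recv(1024)
        n = int(request.decode())
        conn.sendall(str(n * n).encode())


def remote_square(n: int) -> int:
    """Ask the 'remote' node for n squared, using messages only."""
    local_end, remote_end = socket.socketpair()
    node = threading.Thread(target=worker, args=(remote_end,))
    node.start()
    with local_end:
        local_end.sendall(str(n).encode())   # request message
        reply = local_end.recv(1024)         # response message
    node.join()
    return int(reply.decode())
```

The caller and the worker never pass data through a common variable. Everything travels as bytes in a message, which is the kind of detail a middleware API would typically hide from the application.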